Golang Profile Guided Optimization (PGO)

What is Profile Guided Optimization (PGO)? #

Profile Guided Optimization (PGO) is a compiler optimization technique that uses the profile data generated by running the application to guide the optimization process. This allows the compiler to make better decisions on how to optimize the application.

PGO was introduced as a preview in Go 1.20 and has been generally available since Go 1.21. According to the official Go documentation, it can speed up a Go application by around 2% to 7%.

Introduction #

In this blog post, we will learn how to use the Profile Guided Optimization (PGO) feature in Golang and some of its behavior.

To give it the best possible chance, I will be using Go 1.22, the latest version at the time of writing.

The Experiment Setup #

Application #

The application that we will be using is a simple web server that does the following.

- Register (Create)
- Login (Read)
- Update Profile (Update)
- Delete Account (Delete)

This represents the basic CRUD operations that are commonly used in web applications.

These APIs will be implemented using the standard net/http package.

You can find the source code for this experiment in the Source Code Repository.

To remove I/O bottlenecks, we will be using an in-memory map to store the data.

Stress Client #

We will also be developing a client application that will stress the server application by sending a large number of requests to the server. We can adjust this to create a different profile for the server application.

Based on the different distribution of requests, the profile data will be different. This will allow us to see how the profile data affects the optimization process and the items that are optimized.

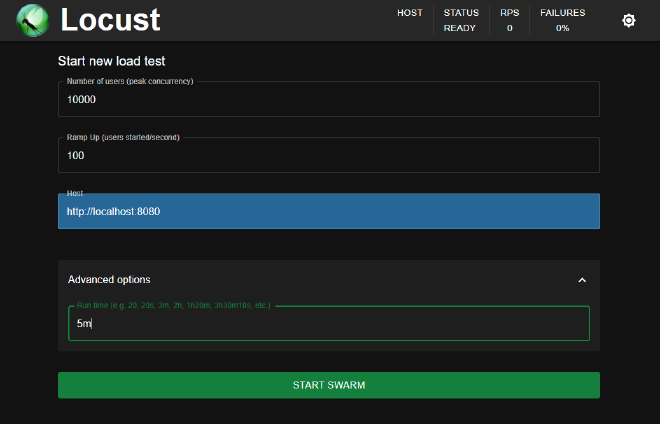

We will be using Locust, a load-testing tool written in Python, to create the stress client.

It makes it simple to put together a client that can stress test the server application in a short amount of time.

The Experiment #

For each of the profiles that we generate, we will be running the following steps.

- Run the stress test with `10000` clients, adding `1000` users per second, until the requests per second reach a steady state.
- Run the stress test a total of 5 times and take the average across the runs.
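The averaging step above can be sketched in a few lines of Python. This is only an illustration: the helper name `average_across_runs`, the metric names, and the numbers in `example_runs` are placeholders chosen for the example, not measurements from this experiment.

```python
from statistics import mean


def average_across_runs(runs):
    """Average each metric across repeated stress-test runs.

    `runs` is a list of dicts, one per run, that all share the same metric names.
    """
    if not runs:
        raise ValueError("at least one run is required")
    metric_names = runs[0].keys()
    return {name: mean(run[name] for run in runs) for name in metric_names}


# Placeholder values for illustration only; these are NOT results from this post.
example_runs = [
    {"avg_response_ms": 30.0, "req_per_s": 960.0},
    {"avg_response_ms": 32.0, "req_per_s": 970.0},
    {"avg_response_ms": 34.0, "req_per_s": 980.0},
    {"avg_response_ms": 31.0, "req_per_s": 965.0},
    {"avg_response_ms": 33.0, "req_per_s": 975.0},
]

print(average_across_runs(example_runs))
```

Averaging over several runs reduces the influence of a single unusually fast or slow run on the reported figure.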

To prevent the profiler from affecting our results, it will be removed when compiling the application for the final testing.

Stress test parameters #

For the stress test, we will be using the Locust client described above, with requests spread evenly across the four endpoints (25% each).

We will be using the following command to run the locust stress test.

    locust -f stress_test/locust_uniform.py

Setting Up the Profile Guided Optimization (PGO) #

Other than the default build, we will be using the following 2 profiles to build the application.

- Uniform Distribution between the 4 APIs (25% each)

- Read Heavy Distribution (85% Read, 5% Create, 5% Update, 5% Delete)

For these 2 profiles, the aim is to take a look at how the profile data affects the optimization process and the items that are optimized.
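Locust picks each task at random in proportion to its `@task` weight, so the request mix of a profile follows directly from the weights. The sketch below shows that arithmetic; `task_shares` is a hypothetical helper written for this post, and the task names are taken from the scripts in the Appendix.

```python
def task_shares(weights):
    """Convert Locust-style task weights into expected shares of picked tasks."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: weight / total for name, weight in weights.items()}


# Weights as used in the two Locust scripts in the Appendix.
uniform = {"login": 25, "register": 25, "update_profile": 25, "delete_profile": 25}
read_heavy = {"login": 85, "register": 5, "update_profile": 5, "delete_profile": 5}

for label, weights in (("uniform", uniform), ("read heavy", read_heavy)):
    shares = task_shares(weights)
    print(label, {name: f"{share:.0%}" for name, share in shares.items()})
```

The real request mix will differ slightly from these shares, because every simulated user also sends one extra `/register` request in `on_start`.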

Each profile is collected over 60 seconds using the command below.

    curl -o ${name}.pgo "http://localhost:8080/debug/pprof/profile?seconds=60"

The `/debug/pprof/profile` endpoint is provided by the standard `net/http/pprof` package. The downloaded `${name}.pgo` file can then be passed to the compiler with `go build -pgo=${name}.pgo`.

The Uniform Distribution profile matches the stress test workload exactly, so it represents a build that is optimized for the workload it will actually serve. The Read Heavy Distribution profile does not match the stress test, so it represents a build that is optimized for the wrong workload.

To set up each profile, we use Locust and distribute the requests according to the percentages listed above.

For the details of the locust script, you can visit the Appendix section.

The Results #

| Profile (used to build with PGO) | Average Response Time (ms) | Median Request Rate (req/s) |
|---|---|---|
| No PGO | 33.31 | 965 |
| Uniform Distribution | 27.8 | 977.8 |
| Read Heavy Distribution | 1038.33 | 995.1 |

Analysis #

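The results show that the profile data has a significant impact on the optimization process.

With a profile that matches the workload (Uniform Distribution), the application performs better than the default build: the average response time drops from 33.31 ms to 27.8 ms (about 16.5% lower), and the median request rate rises slightly from 965 to 977.8 req/s.

However, when the profile is not representative of the workload (Read Heavy Distribution), the average response time rises to 1038.33 ms, roughly 31 times that of the default build, even though the median request rate is slightly higher at 995.1 req/s.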
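The comparison against the default build can be worked out from the results table with a short Python script. This is a minimal sketch: `percent_change` is a helper defined here for illustration, and the numbers are copied from the table above.

```python
def percent_change(baseline, value):
    """Relative change of `value` against `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


# (average response time in ms, median request rate in req/s) from the results table.
results = {
    "No PGO": (33.31, 965.0),
    "Uniform Distribution": (27.8, 977.8),
    "Read Heavy Distribution": (1038.33, 995.1),
}

base_ms, base_rps = results["No PGO"]
for name, (ms, rps) in results.items():
    print(
        f"{name}: response time {percent_change(base_ms, ms):+.1f}%, "
        f"request rate {percent_change(base_rps, rps):+.1f}%"
    )
```

Looking at both columns together matters here: the Read Heavy build has the highest request rate, but also by far the worst average response time.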

Conclusion #

For anyone who is looking to squeeze out the last bit of performance from their application, Profile Guided Optimization (PGO) is a good way to do so.

However, it is important that the profile data used to optimize the application is representative of the workload that the application will be handling.

Otherwise, the application can perform worse than the default build.

There are some limitations of this blog post that should be noted.

- The application is a simple web server that does not represent all web applications.

- The duration of the stress test may not be long enough to get a good profile of the application.

Maybe in the future, we can run similar experiments using Go benchmarks on different algorithms and see how the profile data affects the optimization process.

Appendix #

Profile 1: Uniform Distribution #

For the uniform distribution, we will be using the following locust file

    from locust import FastHttpUser, between, task
    from random import randbytes
    from queue import Queue


    class WebsiteUser(FastHttpUser):
        # Each simulated user waits 5 to 15 seconds between tasks.
        wait_time = between(5, 15)
        # Usernames created by register() and later consumed by delete_profile().
        user_queue = Queue()

        def on_start(self):
            # Register a user to be used in the login task
            self.client.post(
                "/register", {"username": "foo", "password": "bar", "email": "test@test.com"})

        @task(25)
        def login(self):
            self.client.post(
                "/login", {"username": "foo", "password": "bar"})

        @task(25)
        def register(self):
            rand_username = randbytes(10).hex()
            rand_password = randbytes(10).hex()
            rand_email = randbytes(10).hex() + "@test.com"
            self.client.post(
                "/register", {"username": rand_username, "password": rand_password, "email": rand_email})
            self.user_queue.put(rand_username)

        @task(25)
        def update_profile(self):
            rand_email = randbytes(10).hex() + "@test.com"
            self.client.post(
                "/profile", {"username": "foo", "email": rand_email})

        @task(25)
        def delete_profile(self):
            # Deletes a previously registered user; get() waits if none is queued yet.
            self.client.post("/delete", {"username": self.user_queue.get()})

Profile 2: Read Heavy Distribution #

For the read heavy distribution, we will be using the following locust file

    from locust import FastHttpUser, between, task
    from random import randbytes
    from queue import Queue


    class WebsiteUser(FastHttpUser):
        # Each simulated user waits 5 to 15 seconds between tasks.
        wait_time = between(5, 15)
        # Usernames created by register() and later consumed by delete_profile().
        user_queue = Queue()

        def on_start(self):
            # Register a user to be used in the login task
            self.client.post(
                "/register", {"username": "foo", "password": "bar", "email": "test@test.com"})

        @task(85)
        def login(self):
            self.client.post(
                "/login", {"username": "foo", "password": "bar"})

        @task(5)
        def register(self):
            rand_username = randbytes(10).hex()
            rand_password = randbytes(10).hex()
            rand_email = randbytes(10).hex() + "@test.com"
            self.client.post(
                "/register", {"username": rand_username, "password": rand_password, "email": rand_email})
            self.user_queue.put(rand_username)

        @task(5)
        def update_profile(self):
            rand_email = randbytes(10).hex() + "@test.com"
            self.client.post(
                "/profile", {"username": "foo", "email": rand_email})

        @task(5)
        def delete_profile(self):
            # Deletes a previously registered user; get() waits if none is queued yet.
            self.client.post("/delete", {"username": self.user_queue.get()})
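
One caveat applies to both scripts: `delete_profile` calls `user_queue.get()`, which by default waits until a username is available. If a delete task is picked before any register task has queued a username, that simulated user waits instead of sending a request. The sketch below shows a non-blocking alternative, assuming it is acceptable to skip the delete in that case; `pop_username` is a hypothetical helper, not part of Locust or of the original scripts.

```python
from queue import Empty, Queue


def pop_username(user_queue):
    """Return the next queued username, or None when the queue is empty."""
    try:
        # get_nowait() raises queue.Empty instead of waiting for an item.
        return user_queue.get_nowait()
    except Empty:
        return None


user_queue = Queue()
first = pop_username(user_queue)   # queue is still empty here
user_queue.put("example-user")
second = pop_username(user_queue)  # returns the username queued above
print(first, second)
```

Inside `delete_profile`, the task could then return early when the helper yields `None` and only send the `/delete` request otherwise.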